Individual pixels in a CCD camera record only brightness: light and dark, black and white. They
don't see color. Producing a color image requires taking three separate monochrome
(black and white) images through individual red, green, and blue filters. These three
monochrome images, each representing a single color of light (red, green, or
blue), are then combined in your computer to produce the final full-color image.
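
As a rough illustration of that combining step, the sketch below merges three monochrome frames into one color frame. The function name `combine_rgb` and the tiny 2x2 frames are made up for this example; real processing software also aligns and color-balances the frames, which is not shown here.

```python
def combine_rgb(red, green, blue):
    """Merge three equally sized monochrome frames into one frame of (R, G, B) tuples."""
    if not (len(red) == len(green) == len(blue)):
        raise ValueError("frames must have the same number of rows")
    color = []
    for r_row, g_row, b_row in zip(red, green, blue):
        if not (len(r_row) == len(g_row) == len(b_row)):
            raise ValueError("rows must have the same length")
        # Each output pixel carries one value from each filtered exposure.
        color.append(list(zip(r_row, g_row, b_row)))
    return color


# Hypothetical 2x2 frames with brightness values from 0 to 255.
red_frame = [[200, 10], [0, 255]]
green_frame = [[100, 20], [0, 255]]
blue_frame = [[50, 30], [0, 255]]

color_image = combine_rgb(red_frame, green_frame, blue_frame)
print(color_image[0][0])  # (200, 100, 50)
```

Each pixel of the result holds three directly measured values, one from each filtered exposure.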

Most CCD cameras take the three filtered images sequentially and store them in the
computer for later processing, with the operator changing color filters between
exposures. However, several CCD manufacturers offer single-shot color cameras
that record all three color images at the same time, in a single exposure. These
cameras are also available in conventional monochrome versions. The single-shot
color CCD cameras are essentially identical to their monochrome counterparts,
except for a permanent color filter matrix over the pixels
that lets them take all three color images simultaneously, as explained below.

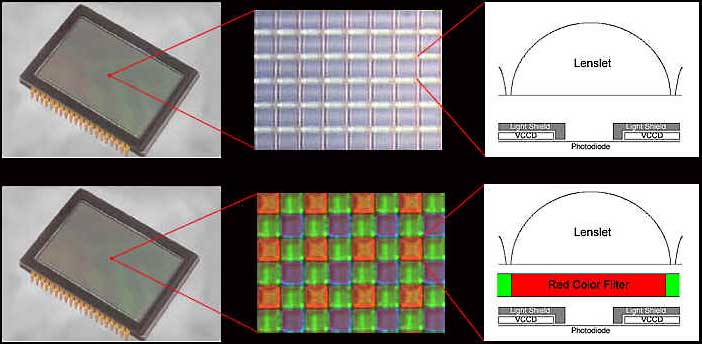

The images in the box above show the basic structure of the pixels on a Kodak CCD
detector, such as those used in high-end SBIG and Finger Lakes Instrumentation single-shot
color cameras. The top row shows a monochrome detector; the bottom row shows a single-shot
color detector. The center image in each row is an actual photograph of the surface
of the CCD showing a small section of the pixel array. The drawings at the right
depict a side view of an individual pixel.

As you can see from the bottom row of images, the CCD structure for the single-shot
color version is the same as the monochrome version except for the red, green, and
blue pattern of filters over the pixels. The arrangement of colored filters over
the pixels in a single-shot color camera is a repeating 2x2 square of RGGB known as
a Bayer pattern. This repeating pattern of RGGB pixels allows the separate red,
green, and blue data to be collected in a single exposure and electronically
separated into the three monochrome images your computer needs to reconstruct a
full-color image. One pixel in four sees red, one pixel in four sees blue, and
every other pixel sees green. Special software interpolates the RGB color data for
each individual pixel in the frame from the color information in the adjacent colored
pixels.
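
To make the interpolation step concrete, here is a minimal sketch of one very simple approach: for each pixel, keep the value it measured directly and estimate the other two colors by averaging the same-colored pixels in the surrounding 3x3 neighborhood. This is an illustrative assumption, not the algorithm any particular camera software uses; commercial programs rely on more refined methods. The names `bayer_color` and `demosaic` are hypothetical.

```python
def bayer_color(row, col):
    """Return the filter color over pixel (row, col) in an RGGB mosaic."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(mosaic):
    """Estimate (R, G, B) at every pixel from a raw RGGB mosaic (at least 2x2)."""
    height, width = len(mosaic), len(mosaic[0])
    if height < 2 or width < 2:
        raise ValueError("mosaic must be at least 2x2")
    image = []
    for row in range(height):
        out_row = []
        for col in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            # Gather same-colored samples from the 3x3 neighborhood,
            # clipped at the edges of the frame.
            for r in range(max(0, row - 1), min(height, row + 2)):
                for c in range(max(0, col - 1), min(width, col + 2)):
                    color = bayer_color(r, c)
                    sums[color] += mosaic[r][c]
                    counts[color] += 1
            pixel = {k: sums[k] / counts[k] for k in sums}
            # The pixel's own color was measured directly, so keep it as is.
            pixel[bayer_color(row, col)] = float(mosaic[row][col])
            out_row.append((pixel["R"], pixel["G"], pixel["B"]))
        image.append(out_row)
    return image


# Hypothetical 4x4 raw frame of a uniformly colored target:
# red pixels read 100, green pixels 50, blue pixels 20.
levels = {"R": 100, "G": 50, "B": 20}
raw = [[levels[bayer_color(r, c)] for c in range(4)] for r in range(4)]

image = demosaic(raw)
print(image[1][1])  # (100.0, 50.0, 20.0)
```

Pixel (1, 1) sits under a blue filter, so only its blue value was measured; its red and green values are averages borrowed from its neighbors.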

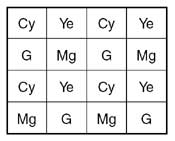

Many of the more economical cameras from Celestron, Meade, and Orion use Sony CCD
detectors primarily designed for general use in camcorders and other consumer electronics,
rather than the more-specialized detectors from Kodak. The Sony detectors use a
color filter matrix of yellow, cyan, magenta, and green filters in a repeating sequence.
Sophisticated addition and subtraction algorithms then convert the signals from
these filters into the desired RGB signal. The Sony filter matrix pattern is
shown below.
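
Separately from the filter layout itself, the idea behind the addition and subtraction can be illustrated with a simplified sketch. It assumes ideal complementary filters, where cyan passes green plus blue, magenta passes red plus blue, and yellow passes red plus green. That assumption, and the function name `cmy_to_rgb`, are ours for illustration only. Real cameras use calibrated conversions that also take the green pixels into account.

```python
def cmy_to_rgb(cyan, magenta, yellow):
    """Recover (R, G, B) from idealized complementary-filter signals.

    Assumes cyan = G + B, magenta = R + B, yellow = R + G (an idealization).
    """
    red = (magenta + yellow - cyan) / 2
    green = (yellow + cyan - magenta) / 2
    blue = (cyan + magenta - yellow) / 2
    return red, green, blue


# Hypothetical scene values: R = 30, G = 50, B = 20.
cyan_signal = 50 + 20     # G + B
magenta_signal = 30 + 20  # R + B
yellow_signal = 30 + 50   # R + G

print(cmy_to_rgb(cyan_signal, magenta_signal, yellow_signal))  # (30.0, 50.0, 20.0)
```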

What are the differences between taking three separate exposures versus one? Primarily,
it is a trade-off: the monochrome camera offers greater sensitivity and flexibility at
the price of greater complexity and higher cost, while the single-shot color camera offers simplicity,
ease of use, and lower overall cost for color imaging. A single-shot color camera
needs only one image to do the job of the three needed by a monochrome camera/color
filter wheel system. While this is simpler and less time-consuming, it results in
a difference in the amount and quality of data recorded by each camera. The final
image from a single-shot color camera has the same number of total pixels as a color
image created by a monochrome camera and external filters, but it is created from
less original data than the three discrete images of a monochrome camera. In addition,
only one-third of the color information for each pixel is unique to that pixel and
measured directly. The other two color values are approximations, derived from adjacent
pixels.
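
The difference in original data is simple arithmetic, sketched below for a hypothetical 1000 x 1000 pixel detector. The sensor size and the function name `color_samples` are assumptions chosen only to make the numbers easy to follow.

```python
def color_samples(width, height):
    """Count measured and interpolated color values in the final color image."""
    pixels = width * height
    values_in_final_image = 3 * pixels  # one R, one G, one B per pixel
    return {
        # Three filtered exposures: every value is measured directly.
        "monochrome_measured": 3 * pixels,
        # One exposure through the filter matrix: one measured value per pixel.
        "single_shot_measured": pixels,
        "single_shot_interpolated": values_in_final_image - pixels,
    }


counts = color_samples(1000, 1000)
print(counts["monochrome_measured"])       # 3000000
print(counts["single_shot_measured"])      # 1000000
print(counts["single_shot_interpolated"])  # 2000000
```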

In the case of a monochrome camera, the external color filters can be designed specifically
for astronomical use, with high light transmission, precisely tailored response
curves, and better control of the color balance between the emission line and
continuum light for different deep space objects. With a single-shot color camera, there is no way to tailor the
sensitivity and spectral response of each color filter in the matrix to match the
emissions of the object you are imaging, or to use special-purpose narrowband filters
such as OIII (doubly ionized oxygen), SII (ionized sulfur), or H-alpha. The matrix filters are general-purpose
red, green, and blue filters only.

As far as sensitivity is concerned, the monochrome camera is somewhat more sensitive
due mainly to the nature of the external filters compared to the micro-filters placed
over each pixel in the single-shot color camera. The monochrome camera requires
more work to take a tri-color image, however, and the addition of the required filters
and color filter wheel makes it more expensive.

The effective QE (quantum efficiency) of the monochrome camera with external filters
is slightly higher than that of the single-shot color camera because of the filter transmission
characteristics. But remember, the monochrome camera must take three frames versus
the single-shot color camera's single frame. So for a proper comparison, a monochrome
camera taking a 20-minute image through each of the three filters should be compared
to a single-shot color camera taking a single 60-minute image. In this case, the
single-shot color camera compares very well to its monochrome counterpart. Moreover,
self-guiding the single-shot color camera is easier because the separate
built-in guider detector is never covered by a filter, which can affect the guider's
tracking performance. Where a monochrome camera shines is in taking a grayscale
image, or in taking narrowband monochrome or tri-color images of emission line
objects. But for simple color images, single-shot color cameras are very capable.
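
One rough way to see why the 3 x 20 minute and 1 x 60 minute cases are comparable is to count "pixel-minutes" of exposure per color. The sketch below assumes an RGGB matrix, equal filter transmission in both cameras (the monochrome system's filters are actually somewhat better, as noted above), and a hypothetical one-million-pixel detector. The function names are made up for this example.

```python
def pixel_minutes_monochrome(pixels, minutes_per_filter):
    """Every pixel is exposed through each filter in turn."""
    return {color: pixels * minutes_per_filter for color in ("R", "G", "B")}


def pixel_minutes_single_shot(pixels, total_minutes):
    """Each pixel is exposed the whole time, but only through its own filter."""
    share = {"R": 0.25, "G": 0.5, "B": 0.25}  # RGGB: 1 red, 2 green, 1 blue
    return {color: pixels * share[color] * total_minutes for color in share}


mono = pixel_minutes_monochrome(1_000_000, 20)
osc = pixel_minutes_single_shot(1_000_000, 60)

print(mono)  # {'R': 20000000, 'G': 20000000, 'B': 20000000}
print(osc)   # {'R': 15000000.0, 'G': 30000000.0, 'B': 15000000.0}
```

Under these assumptions both cameras accumulate the same total of 60 million pixel-minutes. The single-shot camera simply spends more of it on green and less on red and blue.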